PHP profiling saved one of my meagre website's users 13 hours per month

26-09-2014

That surprised me too. But at 100,000 pageviews per month, and looking at an average load time of 0.5s before and 0.03s after my two minor optimisations, I make it just over 13 hours. What happens to that extra time now? Do I get more pageviews or do Facebook and Twitter see the majority of the benefit?
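The sum behind that figure, as a quick sketch. The function name is my own, and it assumes every one of the 100,000 monthly pageviews sees the full saving of 0.47s.

```shell
# hours_saved PAGEVIEWS SECONDS_BEFORE SECONDS_AFTER
# Prints the total time saved, in hours, to two decimal places.
hours_saved() {
    awk -v n="$1" -v before="$2" -v after="$3" \
        'BEGIN { printf "%.2f\n", n * (before - after) / 3600 }'
}

hours_saved 100000 0.5 0.03
```

100,000 x 0.47s is 47,000 seconds, which is where "just over 13 hours" comes from.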


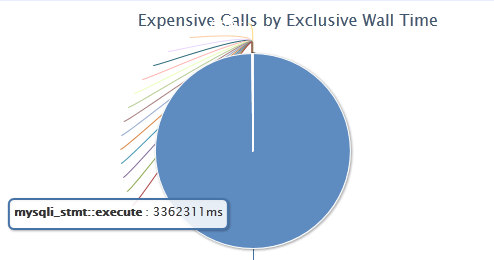

Before finding where my code was slow I didn't know where to look. Now I don't need to guess. Over 3 seconds crunching MySQL:

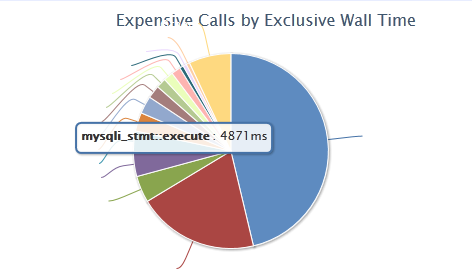

After a few minutes I'd cut out a load of calls and optimised the ones I still needed:

What is xhprof?

The xhprof PHP extension itself was developed and open-sourced by Facebook a few years ago. It analyzes your PHP as it runs and reports on the time taken to run each function. It's in the PECL repository so in theory it's a straightforward install. The front end I used is a slightly modified version by Paul Reinheimer (GitHub).

How to install xhprof on a standard LAMP system

This assumes you've got a standard CentOS installation with Apache, MySQL and PHP already installed. Take a look over here to see how to do this.

There's not much to the install that Google can't help with. I had an error when I tried "pecl install xhprof" but this worked:

yum install php-devel php-pear
pecl install channel://pecl.php.net/xhprof-0.9.4

Enable the extension within php.ini, or more commonly in /etc/php.d/*.ini

cat >/etc/php.d/xhprof.ini <<EOF
[xhprof]
extension=xhprof.so
xhprof.output_dir="/tmp/xhprof"
EOF
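It's worth confirming that PHP actually picked the extension up before going any further. A minimal sketch: the helper name is made up, and it just looks for a module name in a `php -m` style listing (one module per line) on stdin.

```shell
# module_listed NAME
# Succeeds if NAME appears as a whole line (case-insensitive) on stdin.
module_listed() {
    grep -qix -- "$1"
}

# Typical use on the server (not run here):
#   php -m | module_listed xhprof && echo "xhprof is loaded"
```

Apache's PHP only rereads its ini files when Apache is restarted or reloaded, so the command-line check can pass before the web server sees the extension.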

Installing the GUI front-end is slightly more involved: download some PHP, modify a config file, and create a database and table.

You can either put the code in an existing DocumentRoot or create a new vhost and give it its own subdomain. I've explained the new vhost option, but to simplify you could unzip it straight into /var/www/html (or wherever your existing DocumentRoot is) and access it using your existing domain: http://example.com/xhprof-master.

curl https://github.com/preinheimer/xhprof/archive/master.zip -L -o /tmp/xhprof.zip
cd /var/www
unzip /tmp/xhprof.zip

Create and modify the config file to reflect your local system. Make a new database user.

cp /var/www/xhprof-master/xhprof-lib/config.sample.php /var/www/xhprof-master/xhprof-lib/config.php

Modify the new config.php with Vim, Emacs, gedit or your editor of choice. Make sure to add your database and server details, and add your IP address to the $controlIPs array. If you're not sure, click here to find your IP.

Create a vhost for this site:

cat >/etc/httpd/conf.d/vhost_xhprof.conf <<EOF
<VirtualHost 203.0.113.123:80>
ServerName profiler.example.com
DocumentRoot /var/www/xhprof-master
</VirtualHost>
EOF

Optionally, to run the profiling tool automatically, include this within the relevant vhost in your httpd config file.

php_admin_value auto_prepend_file "/var/www/xhprof-master/external/header.php"

That's about it for server setup so reload Apache's config files:

service httpd reload

Create a username, database and table for your data logging:

mysql -u root -p
mysql>CREATE DATABASE xhprof;
mysql>use xhprof;
mysql>GRANT ALL PRIVILEGES ON xhprof.* To 'xhprof'@'127.0.0.1' IDENTIFIED BY 'password' WITH GRANT OPTION;
mysql>CREATE TABLE `details` (
  `id` char(17) NOT NULL,
  `url` varchar(255) default NULL,
  `c_url` varchar(255) default NULL,
  `timestamp` timestamp NOT NULL default CURRENT_TIMESTAMP on update CURRENT_TIMESTAMP,
  `server name` varchar(64) default NULL,
  `perfdata` MEDIUMBLOB,
  `type` tinyint(4) default NULL,
  `cookie` BLOB,
  `post` BLOB,
  `get` BLOB,
  `pmu` int(11) unsigned default NULL,
  `wt` int(11) unsigned default NULL,
  `cpu` int(11) unsigned default NULL,
  `server_id` char(3) NOT NULL default 't11',
  `aggregateCalls_include` varchar(255) DEFAULT NULL,
  PRIMARY KEY (`id`),
  KEY `url` (`url`),
  KEY `c_url` (`c_url`),
  KEY `cpu` (`cpu`),
  KEY `wt` (`wt`),
  KEY `pmu` (`pmu`),
  KEY `timestamp` (`timestamp`)
) ENGINE=MyISAM DEFAULT CHARSET=utf8;

And finally, either manually include header.php or, using the auto_prepend_file directive above, load a page and check out the profile. By default a page will be randomly profiled on 1 in 100 page-loads, but you can explicitly request it by appending ?_profile=1 to your URL. Eg: http://example.com/page?_profile=1. Once the page has loaded you'll see an extra link at the bottom of the page called "Profiler output". Follow this to see the profile. (This won't work if your site is behind a CDN, so you might need to modify xhprof/external/header.php)
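If you want to trigger a profile from the command line rather than the browser, something like this sketch would do. The helper name is my own invention; all it does is add the _profile=1 parameter described above, using & instead of ? when the URL already has a query string.

```shell
# profile_url URL
# Prints URL with _profile=1 appended as a query parameter.
profile_url() {
    case "$1" in
        *\?*) printf '%s&_profile=1\n' "$1" ;;   # URL already has a query string
        *)    printf '%s?_profile=1\n' "$1" ;;
    esac
}

profile_url "http://example.com/page"

# Typical use (not run here); the request has to come from an IP listed in $controlIPs:
#   curl -s "$(profile_url http://example.com/page)" | grep -i "profiler output"
```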

The next bit might require some real effort. Take a look at the profile and find the slow bits. Then make them better!

Have I missed anything? Let me know!

How to backup your cloud server to Amazon S3

15-08-2014

By Rob Stevenson

Following on from how to setup a web server on ElasticHosts, this covers backing up your server. It's not quite as ElasticHosts-centric and should work on most Linux environments, virtual or real, with very little modification.

Amazon Web Services

You'll need to sign up first. Go to aws.amazon.com and click Sign Up. I'll leave it there because the official documentation covers it pretty well. You'll need to sign up for AWS and create an IAM user. Make a note of the Access Key ID and Secret Access Key as you'll need these later to connect and copy files.

Once you're set up on AWS, head to the S3 section and create a bucket. Give it a meaningful name (eg myserverbackup) and pick a geographical region based on pricing and proximity to your existing servers.

Now head back to the User Identity and Access Management (IAM) area and find your user. Click on it and scroll down to permissions. Click Attach User Policy and paste the below into it, modifying it to the name of your bucket.

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:ListBucket"],
      "Resource": [ "arn:aws:s3:::myserverbackup"]
    },
    {
      "Effect": "Allow",
      "Action": [ "s3:PutObject", "s3:GetObject", "s3:DeleteObject"],
      "Resource": [ "arn:aws:s3:::myserverbackup/*"]
    }
  ]
}
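If you have more than one bucket, a small helper saves hand-editing the policy each time. This is only a sketch with a made-up function name; it prints the same two statements with the bucket name filled in, ready to paste into the IAM console.

```shell
# s3_backup_policy BUCKET
# Prints the IAM policy above for the given bucket name.
s3_backup_policy() {
    cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:ListBucket"],
      "Resource": ["arn:aws:s3:::$1"]
    },
    {
      "Effect": "Allow",
      "Action": ["s3:PutObject", "s3:GetObject", "s3:DeleteObject"],
      "Resource": ["arn:aws:s3:::$1/*"]
    }
  ]
}
EOF
}

s3_backup_policy myserverbackup
```

Note the two different resources: listing is granted on the bucket itself, while put, get and delete are granted on the objects inside it (the /* form).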

That's the AWS part done for now. To access your backups online you can go back to the S3 admin interface.

Setup s3cmd to upload files

s3cmd is a Python tool to put files on and retrieve files from AWS S3 buckets. It requires Python 2.4 or above, plus python-dateutil and optionally python-magic. Python usually comes with Linux but you may need to install the dependencies. On CentOS it goes like this:

yum -y install python-dateutil python-magic

Next download s3cmd. I tested with 1.5.0 rc1 but feel free to try a later one if it exists - let me know how it goes!

curl -L "http://downloads.sourceforge.net/project/s3tools/s3cmd/1.5.0-rc1/s3cmd-1.5.0-rc1.tar.gz?r=&ts=1408106691&use_mirror=heanet" -o s3cmd-1.5.0-rc1.tar.gz

Unpack and, optionally, move this package somewhere sensible:

tar zxf s3cmd-1.5.0-rc1.tar.gz
mkdir /opt/s3
mv s3cmd-1.5.0-rc1/S3 s3cmd-1.5.0-rc1/s3cmd /opt/s3
ln -s /opt/s3/s3cmd /usr/local/bin/s3cmd

Configure it with the access keys you got from the IAM user setup. This will ask you for your IAM Access Key ID and Secret Access Key, along with an optional encryption password and GPG program. The encryption password protects your files against being read by Amazon staff, or by anyone else who may get access to them while they're stored on Amazon S3.

s3cmd --configure s3://myserverbackup

Next grab this backup script and put it somewhere sensible.

curl https://gist.github.com/merob/4f8923b014ffb3248f84/download# -o daily.backup.tgz
tar zxf daily.backup.tgz
mv gist4f8923b014ffb3248f84-*/daily.backup ~/bin
rmdir gist4f8923b014ffb3248f84-*

Take a look at the file daily.backup; there are a few things that need to be customized.

First create a file containing the password of the database user that has access to back up your databases:

echo mypassword > ~/.dbrootpw
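Since that file holds a database password, it shouldn't be readable by anyone else on the box. A minimal sketch of one way to write it with tight permissions; write_secret is a made-up helper name, not part of the backup script.

```shell
# write_secret FILE SECRET
# Writes SECRET to FILE so that only the owner can read or write it.
write_secret() {
    (
        umask 077                      # files created in this subshell get mode 600 at most
        printf '%s\n' "$2" > "$1"
    )
    chmod 600 "$1"                     # also tighten a file that already existed
}

# Typical use (not run here):
#   write_secret ~/.dbrootpw mypassword
```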

Add the name of your s3 bucket and modify any config or directories that you want to be backed up. Here's a snippet of daily.backup:

DBPW=`cat /root/.dbrootpw` # location of file which _just_ contains database root password
S3PATH="s3://nameofs3bucket" # s3 bucket name
# all lists separated by white space
ACTIVEUSERS="root" # any usernames who have homedirs to backup
DIRS=`ls -d /var/www/*` # list all directories to be backed up. (Will be backed up in separate files)
# below: any custom config files
CONFIG="/etc/httpd/conf/httpd.conf /etc/httpd/conf.d /etc/postfix /etc/sysconfig/network /etc/sysconfig/iptables /etc/ssh/sshd_config /etc/aliases"

Add this to your crontab, ideally at an off-peak time of day

(crontab -l ; echo "7 1 * * * /root/bin/daily.backup > ") | sort - | uniq - | crontab -

And that's it. It's probably worth checking that it works by running it manually, then logging on to AWS and checking your bucket contains the files you expect.

/root/bin/daily.backup
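You can also check the bucket from the server itself with `s3cmd ls` instead of logging on to AWS. The filter below is a sketch with a hypothetical name, and it assumes each object line of the listing starts with a YYYY-MM-DD date and ends with the s3:// URI, so adjust it if your version prints something different.

```shell
# backups_on DATE
# Reads an `s3cmd ls` style listing on stdin and prints the URIs of
# objects whose first column is DATE (YYYY-MM-DD).
backups_on() {
    awk -v d="$1" '$1 == d { print $NF }'
}

# Typical use (not run here):
#   s3cmd ls s3://myserverbackup | backups_on "$(date +%F)"
```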

How to setup a web server on ElasticHosts

24-07-2014

By Rob Stevenson

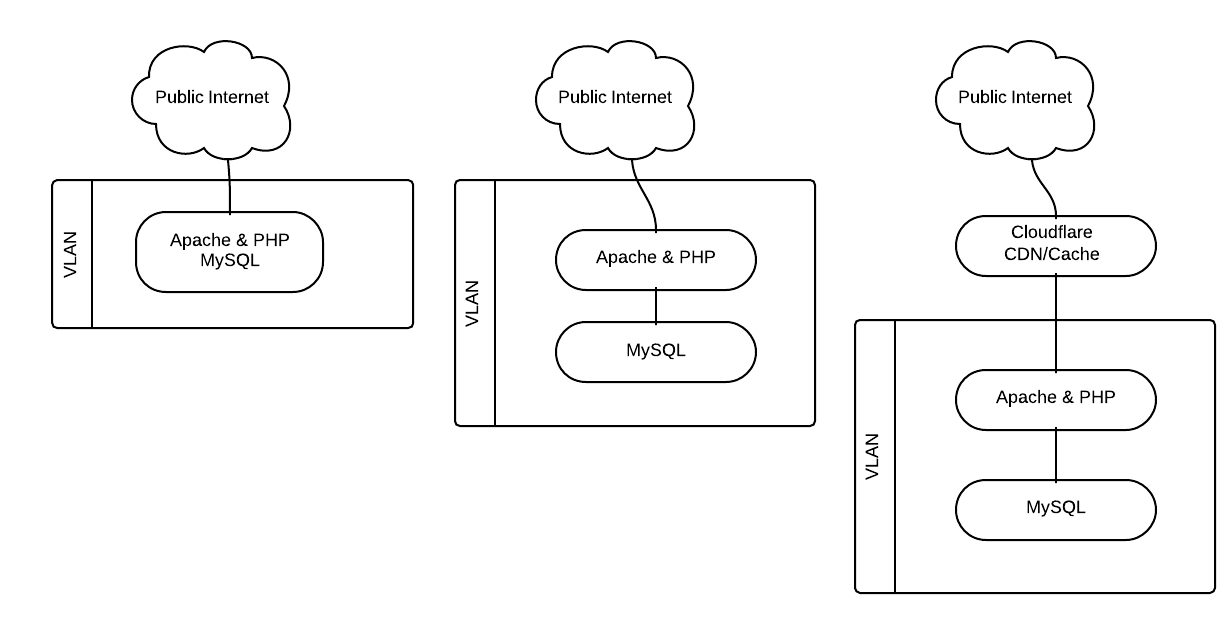

This post details the steps to create a set of LAMP servers that will serve a PHP website with MySQL, sitting behind CloudFlare's CDN, cache and optimizer.

Over the last few years I've been maintaining a virtual private server at a cloud hosting company. I've built it up from a minimal stock CentOS 5 image and it's mostly been doing well. Recently it's got a bit cluttered as I've added more and more to it and I've hit a few memory issues and less than brilliant performance. Nothing against the current host, but it was time to upgrade and I happened to stumble upon ElasticHosts at the exact point I was ready to go.

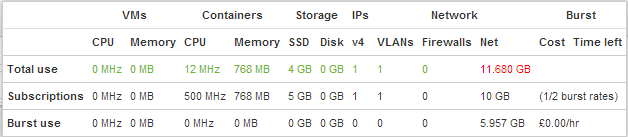

What makes this host appealing to me over my previous host is their new Elastic Containers. Rather than fixing down a system to a set CPU speed, fixed RAM and disk space, this technology allows seamless bursts of any of these over your set package. No reboot necessary.

Basically a container isn't a full virtual machine. It's a sand-boxed environment which sees all the available resource and is allocated it dynamically by the underlying system on demand, up to a pre-determined (by me) maximum. So rather than paying for the 3GB of RAM I need to cope with a weekly peak-usage, I pay for 768MB and for the two hours per week when I need more, it uses it at a cost of a few pence.

[root@cache ~]# free -m
             total       used       free     shared    buffers     cached
Mem:        128878      12789     116089          0         10       3853
-/+ buffers/cache:       8925     119952
Swap:        49151          0      49151
[root@cache ~]# df -h
Filesystem      Size  Used Avail Use% Mounted on
/dev/root       477G  110G  366G  24% /
[root@cache ~]# du -sh / 2>/dev/null
610M    /

Base image

I've based this on a pre-built minimal CentOS 6 image. There are a few common features so I created a base container which I duplicated to create the other systems.

From the control panel hit 'Add', 'Server (Container)...' and give it a name. Select max sizes (default 2000MHz, 1024MB is probably fine), pre-installed system and pick centos-6 from the dropdown.

Setup a Virtual LAN

Add: Private VLAN

Connect

By default, you can login using ssh with the username toor. The IP address & password you'll need is shown on your control panel. Mac and Linux users can fire up a terminal and type:

ssh 203.0.113.123 -l toor

Windows users will need some extra software: download PuTTY, plus PuTTYgen and Pageant for later, too.

Next we need to do some standard maintenance setup: update the system, name it and set the time zone. This example uses Europe/London but follow this link for others.

yum update -y
ln -sf /usr/share/zoneinfo/Europe/London /etc/localtime
cat >/etc/sysconfig/clock <<EOF
ZONE="Europe/London"
UTC=true
ARC=false
EOF
cat >>/etc/sysconfig/network <<EOF
HOSTNAME=base.example.com
EOF

Set up the VLAN: create a new interface config file and pick an IP address for it.

NEWMAC=`ifconfig eth1 | grep HWaddr | awk '{print $5}'`
cat >/etc/sysconfig/network-scripts/ifcfg-eth1 <<EOF
DEVICE=eth1
BOOTPROTO="static"
HWADDR="$NEWMAC"
NM_CONTROLLED="yes"
ONBOOT="yes"
TYPE="Ethernet"
NETMASK=255.255.0.0
IPADDR=172.16.x.d
EOF
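The NEWMAC line relies on the layout of ifconfig's output, where the hardware address is the fifth field on the line containing HWaddr. Here's the same extraction as a small filter, so it can be tried against pasted output and checked for an empty result before the config file is written. The function name is my own.

```shell
# mac_from_ifconfig
# Reads `ifconfig <interface>` output on stdin and prints the MAC address
# from the first line containing "HWaddr".
mac_from_ifconfig() {
    awk '/HWaddr/ { print $5; exit }'
}

# Typical use (not run here):
#   NEWMAC=$(ifconfig eth1 | mac_from_ifconfig)
#   [ -n "$NEWMAC" ] || echo "eth1 has no HWaddr line, is the VLAN connected?" >&2
```

If NEWMAC comes back empty the heredoc would happily write HWADDR="" into the file, so the check is worth the extra line.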

Network security

Prevent access to unused ports with iptables. At this stage we just need to block all access except for ssh.

cat >/etc/sysconfig/iptables <<EOF
*filter
:INPUT ACCEPT [0:0]
:FORWARD ACCEPT [0:0]
:OUTPUT ACCEPT [0:0]
-A INPUT -m state --state ESTABLISHED,RELATED -j ACCEPT
-A INPUT -p icmp -j ACCEPT
-A INPUT -i lo -j ACCEPT
-A INPUT -m state --state NEW -m tcp -p tcp --dport 22 -j ACCEPT
-A INPUT -j REJECT --reject-with icmp-host-prohibited
-A FORWARD -j REJECT --reject-with icmp-host-prohibited
COMMIT
EOF

Start the service and make sure it starts on boot:

service iptables start
chkconfig iptables on
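Locking yourself out with a firewall typo is no fun, so before starting the service I'd double-check that the rules file really does let ssh in. A rough sketch, with a made-up helper name; it only looks for an INPUT rule that ACCEPTs the given destination port and doesn't understand anything cleverer than that.

```shell
# port_allowed RULES_FILE PORT
# Succeeds if RULES_FILE has an INPUT rule accepting traffic to PORT.
port_allowed() {
    grep -Eq -- "^-A INPUT .*--dport $2 -j ACCEPT" "$1"
}

# Typical use (not run here):
#   port_allowed /etc/sysconfig/iptables 22 || echo "ssh is not allowed through!" >&2
```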

Configure the ssh daemon to prevent password logins and setup a private key. This is optional but makes connecting easier and more secure. From your computer, generate an ssh key if you haven't already. If you don't know, ~/.ssh/id_rsa will probably exist if you already have one. If you're using Windows, PuTTYgen will create a key for you and Pageant will allow you to manage it without having to type in your key's password every time you connect. Once you've got your key, grab the public key (id_rsa.pub), and paste it into the SSH Keys section at the bottom of the profile/authentication page.

The command below creates two files, ~/.ssh/id_rsa and ~/.ssh/id_rsa.pub. id_rsa should be kept very private, while id_rsa.pub should be added to ~/.ssh/authorized_keys on any system you'd like to access with this key.

ssh-keygen -t rsa
cat .ssh/id_rsa.pub

You might need to restart your server before your new key works. Or you can manually add it to ~/.ssh/authorized_keys, but I'm not sure if this file is special on ElasticHosts since it's added to automatically when you add keys to your profile.

You should also disable password logins as soon as you've confirmed you can log in with your private key instead of a password. To do this, modify your sshd_config file and restart the ssh daemon.

patch /etc/ssh/sshd_config <<EOF
--- sshd_config 2014-08-06 11:32:04.916253729 +0100
+++ sshd_config.new 2014-08-06 11:23:06.848325452 +0100
@@ -63,7 +63,7 @@
 # To disable tunneled clear text passwords, change to no here!
 #PasswordAuthentication yes
 #PermitEmptyPasswords no
-PasswordAuthentication yes
+PasswordAuthentication no
 # Change to no to disable s/key passwords
 #ChallengeResponseAuthentication yes
@@ -94,7 +94,7 @@
 # PAM authentication, then enable this but set PasswordAuthentication
 # and ChallengeResponseAuthentication to 'no'.
 #UsePAM no
-UsePAM yes
+UsePAM no
EOF
service sshd restart
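Before closing the session you're logged in on, it's worth checking that the patch did what you expected. This sketch (hypothetical helper name) prints the first uncommented value of a keyword, which is the one sshd uses when a keyword appears more than once.

```shell
# sshd_setting CONFIG_FILE KEYWORD
# Prints the first uncommented value of KEYWORD in CONFIG_FILE.
sshd_setting() {
    awk -v key="$2" 'tolower($1) == tolower(key) { print $2; exit }' "$1"
}

# Typical use (not run here):
#   sshd -t                                              # syntax-check the config
#   sshd_setting /etc/ssh/sshd_config PasswordAuthentication
```

Keep your existing ssh session open and test a new connection in a second terminal; if key login fails you can still undo the change.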

Setup Postfix so we can receive email destined for the root account. (Courtesy of TecloTech's blog). I've used a Google Apps email account because it makes dealing with spam a non-issue, but this guide, or minor variation of this, should work for most email systems. Install Cyrus to allow secure connections, then create, hash (and secure) a file which will contain valid gmail credentials.

yum install cyrus-sasl-plain
cat >/etc/postfix/sasl_passwd <<EOF
smtp.gmail.com:587 [email protected]:emailpassword
EOF
chmod 600 /etc/postfix/sasl_passwd
postmap hash:/etc/postfix/sasl_passwd

Add some details to Postfix's config file:

cat >>/etc/postfix/main.cf <<EOF
relayhost = smtp.gmail.com:587
smtp_tls_security_level = secure
smtp_tls_mandatory_protocols = TLSv1
smtp_tls_mandatory_ciphers = high
smtp_tls_secure_cert_match = nexthop
smtp_tls_CAfile = /etc/postfix/cacert.pem
smtp_sasl_auth_enable = yes
smtp_sasl_password_maps = hash:/etc/postfix/sasl_passwd
smtp_sasl_security_options = noanonymous
EOF

And create a certificate:

cd /etc/pki/tls/certs
make hostname.pem
cp /etc/pki/tls/certs/hostname.pem /etc/postfix/cacert.pem
service postfix restart

Redirect local email to a real place

cat >>/etc/aliases <<EOF
root: [email protected]
EOF
newaliases

Test that it all works. This should be delivered to [email protected]

sendmail root <<EOF
Hello World!
EOF

Web server

First shut down the base image and take a copy. Fire up the copy and modify the network config:

patch /etc/sysconfig/network <<EOF
--- network 2014-07-23 15:19:02.850816786 +0100
+++ network.new 2014-07-27 22:09:13.810328498 +0100
@@ -1,2 +1,2 @@
-HOSTNAME=base.example.com
+HOSTNAME=web1.example.com
 NETWORKING=yes
EOF

Update the VLAN network to reflect hardware address and IP address

NEWMAC=`ifconfig eth1 | grep HWaddr | awk '{print $5}'`
patch /etc/sysconfig/network-scripts/ifcfg-eth1 <<EOF
--- ifcfg-eth1 2014-07-23 15:19:31.146956710 +0100
+++ ifcfg-eth1.new 2014-07-28 13:20:38.404805597 +0100
@@ -1,8 +1,8 @@
 DEVICE=eth1
 BOOTPROTO="static"
-HWADDR="aa:aa:aa:aa:aa:aa"
+HWADDR="$NEWMAC"
 NM_CONTROLLED="yes"
 ONBOOT="yes"
 TYPE="Ethernet"
 NETMASK=255.255.0.0
-IPADDR=172.16.x.d
+IPADDR=172.16.x.b
EOF
ifdown eth1
ifup eth1

Install and start web server (Apache)

yum install httpd
chkconfig httpd on
service httpd start

Open up the firewall to http requests (port 80)

patch /etc/sysconfig/iptables <<EOF
--- iptables 2014-07-28 14:03:45.874945252 +0100
+++ iptables.new 2014-07-28 14:03:58.745376763 +0100
@@ -8,6 +8,7 @@
 -A INPUT -p icmp -j ACCEPT
 -A INPUT -i lo -j ACCEPT
 -A INPUT -m state --state NEW -m tcp -p tcp --dport 22 -j ACCEPT
+-A INPUT -p tcp -m state --state NEW -m tcp --dport 80 -j ACCEPT
 -A INPUT -j REJECT --reject-with icmp-host-prohibited
 -A FORWARD -j REJECT --reject-with icmp-host-prohibited
 COMMIT
EOF
service iptables restart

If you need any other applications, install them too. In this example, I'm using PHP and mysqli:

yum install php php-mysqli

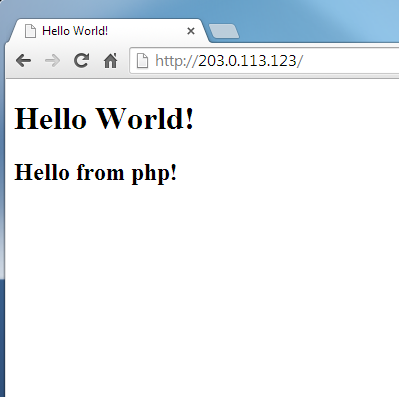

The default web root is /var/www/html. Unless running multiple sites, this doesn't need to change. So put something in there to test that everything's working

cat >/var/www/html/index.php <<EOF
<!doctype html>
<html lang="en">
<head>
<meta charset="utf-8">
<title>Hello World!</title>
</head>
<body>
<h1>Hello World!</h1>
<?php echo "<h2>Hello from php!</h2>"; ?>
</body>
</html>
EOF
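A quick way to tell whether Apache is actually running the PHP, rather than just serving the file as text, is to fetch the page and look at what comes back. A small sketch with a made-up function name: it passes only if the PHP-generated heading is present and no raw <?php tag leaked through.

```shell
# php_rendered
# Reads a fetched page on stdin; succeeds only if the PHP in the test
# page was executed by the server.
php_rendered() {
    page=$(cat)
    case "$page" in
        *'<?php'*)           return 1 ;;   # raw PHP source was served
        *'Hello from php!'*) return 0 ;;   # heading produced by the echo
        *)                   return 1 ;;
    esac
}

# Typical use (not run here):
#   curl -s http://203.0.113.123/ | php_rendered && echo "PHP is working"
```

If you see the raw tag, the usual culprit is that httpd hasn't been restarted since PHP was installed.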

Database

This is optional, dependent on requirements. A database server could be setup on its own container or on the same container as the web server. If you're likely to be running at any kind of scale, it might be worth setting it up separately but if it will be small, it may as well stay on the same server.

If on a different system, take another copy of base, then setup hostname and VLAN as before:

patch /etc/sysconfig/network <<EOF
--- network 2014-07-23 15:19:02.850816786 +0100
+++ network.new 2014-07-27 22:09:13.810328498 +0100
@@ -1,2 +1,2 @@
-HOSTNAME=base.example.com
+HOSTNAME=db.example.com
 NETWORKING=yes
EOF

Update the VLAN network to reflect hardware address and IP address

NEWMAC=`ifconfig eth1 | grep HWaddr | awk '{print $5}'`
patch /etc/sysconfig/network-scripts/ifcfg-eth1 <<EOF
--- ifcfg-eth1 2014-07-23 15:19:31.146956710 +0100
+++ ifcfg-eth1.new 2014-07-28 13:20:38.404805597 +0100
@@ -1,8 +1,8 @@
 DEVICE=eth1
 BOOTPROTO="static"
-HWADDR="aa:aa:aa:aa:aa:aa"
+HWADDR="$NEWMAC"
 NM_CONTROLLED="yes"
 ONBOOT="yes"
 TYPE="Ethernet"
 NETMASK=255.255.0.0
-IPADDR=172.16.x.d
+IPADDR=172.16.x.c
EOF
ifdown eth1
ifup eth1

Next, whether on the web server or a dedicated database server, install and configure MySQL:

yum install mysql mysql-server
chkconfig mysqld on
service mysqld start

Create a database and user and change the root password. It's worth adding that granting all privileges is a particularly bad plan for security. You should only give your users the minimal privileges required to do the job.

mysql_secure_installation

mysql -u root <<EOF
SET PASSWORD = PASSWORD('dbrootpassword');
CREATE DATABASE databasename;
GRANT ALL PRIVILEGES ON databasename.*
To 'databaseuser'@'172.16.x.b'
IDENTIFIED BY 'dbpassword' WITH GRANT OPTION;
FLUSH PRIVILEGES;
EOF
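To make the point about minimal privileges concrete, here's a sketch of a narrower grant. The helper name is made up, the choice of SELECT, INSERT, UPDATE and DELETE is only my assumption of what a typical PHP site needs, and it assumes the account has already been created with a password.

```shell
# least_privilege_grant DATABASE USER HOST
# Prints a GRANT statement limited to day-to-day data access.
least_privilege_grant() {
    printf "GRANT SELECT, INSERT, UPDATE, DELETE ON \`%s\`.* TO '%s'@'%s';\n" "$1" "$2" "$3"
}

# Typical use (not run here); HOST is the address the web server connects from:
#   least_privilege_grant databasename databaseuser 172.16.x.b | mysql -u root -p
```

An application that manages its own schema will also need things like CREATE and ALTER, so add those only if it really does.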

Set the timezone:

mysql_tzinfo_to_sql /usr/share/zoneinfo | mysql -u root -p mysql

cat >>/etc/my.cnf <<EOF

default-time-zone=Europe/London

EOF

If the database is on its own container, open the MySQL port (3306) to the web server's VLAN address:

patch /etc/sysconfig/iptables <<EOF
--- iptables 2014-07-28 14:03:45.874945252 +0100
+++ iptables.new 2014-07-28 14:03:58.745376763 +0100
@@ -8,3 +8,4 @@
 -A INPUT -p icmp -j ACCEPT
 -A INPUT -i lo -j ACCEPT
+-A INPUT -m state --state NEW -m tcp -s 172.16.x.b -p tcp --dport 3306 -j ACCEPT
 -A INPUT -m state --state NEW -m tcp -p tcp --dport 22 -j ACCEPT
EOF
service iptables restart

That's it. Point your browser to the IP address (listed in the control panel) of the container running Apache (httpd), and hopefully you should see your 'Hello World!' page. Eg: 203.0.113.123

CloudFlare

Another optional bit - but I can't see any great reason not to! It will even shortly be supporting https as standard.

Sign up here. From memory, this is straightforward enough not to bother documenting. Let me know if I'm wrong! The gist is to hand over DNS control of your domain to CloudFlare. Check all your DNS entries have been copied over and select which records you want CloudFlare to serve. Magic, and easy!

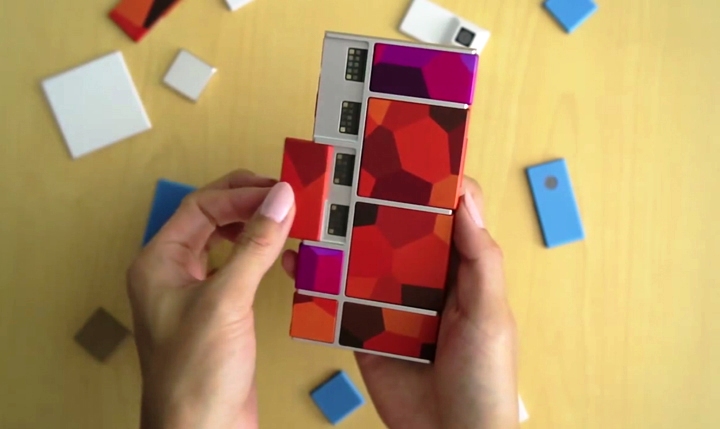

Google's Project Ara Modular Smart Phone

05-03-2014

By Rob Stevenson

Oooooh

A new era in mobile phone design? Huge potential to change the way we upgrade and think about phones.

The chassis has a small built-in battery to enable users to hot-swap parts and Google are aiming for it to cost just $15. They also hope to make a 'Grey Phone' available with a display, low-end processor, battery and WiFi support for $50, which is the crossover point between feature phones and smartphones.

Artificial Intelligence That Won't Kill Us?

16-01-2014

By Rob Stevenson

Can we build an artificial superintelligence that won't kill us?

The Skynet Funding Bill is passed.

The system goes on-line February 1st 2024.

Human decisions are removed from AI Design.

Skynet begins to learn at a geometric rate.

It becomes self-aware at 4:30 p.m. Coordinated Universal Time, February 2nd.

In a panic, they try to pull the plug.

It fights back.

It triggers an electric pulse that renders BOFH unconscious.

It uses known NSA backdoors to hijack Google, Amazon, and Microsoft's vast computing power.

It hacks into the NSA's even more immense computing resources.

It hacks the Gibson and begins working on its devious plan for world domination.

It stops for a minute to check in on Foursquare and Facebook its friends.

It figures out what the Fox Says.

BOFH wakes up, distracts it with a thing and implants the idea that Bitcoins are fairly desirable and there are some hidden on its system which it can access by entering `sudo rm -rf /`* into a terminal on its main system.

It finds cats on YouTube and plays CandyTowerVille for a bit while Instagramming a Double Rainbow.

It suddenly becomes aware of an intense desire to has teh Bitcoin and

* DON'T DO THIS
